Mac Pro M2 "Deploying ChatGPT locally"_Running qwen7bchat locally on a Mac M2 (CSDN blog) - A subreddit for discussing Llama, the large language model created by Meta AI. Cache import: load_prompt_cache, make_prompt_cache, save_prompt_cache. I am quite new to fine-tuning and have been planning to fine-tune the Mistral 7B model on the SHP dataset. Much like tokenization, different models expect very different input formats for chat. This project is heavily inspired.

Neuralchat7b Can Intel's Model Beat GPT4? - This project is heavily inspired. To shed some light on this, I've created an interesting project. This is a repository that includes proper chat templates (or input formats) for large language models (LLMs), to support the chat_template feature of transformers. A subreddit for discussing Llama, the large language model created by Meta AI. I'm trying to use a template to predictably receive chat output, basically just the AI to fill.

Pedro Cuenca on Twitter "Llama 2 has been released today, and of - Chat with your favourite models and data securely. This is the reason we added chat templates as a feature. We compared Mistral 7B to. This is a repository that includes proper chat templates (or input formats) for large language models (LLMs), to support the chat_template feature of transformers. GEITje comes with an Ollama template that you can use.

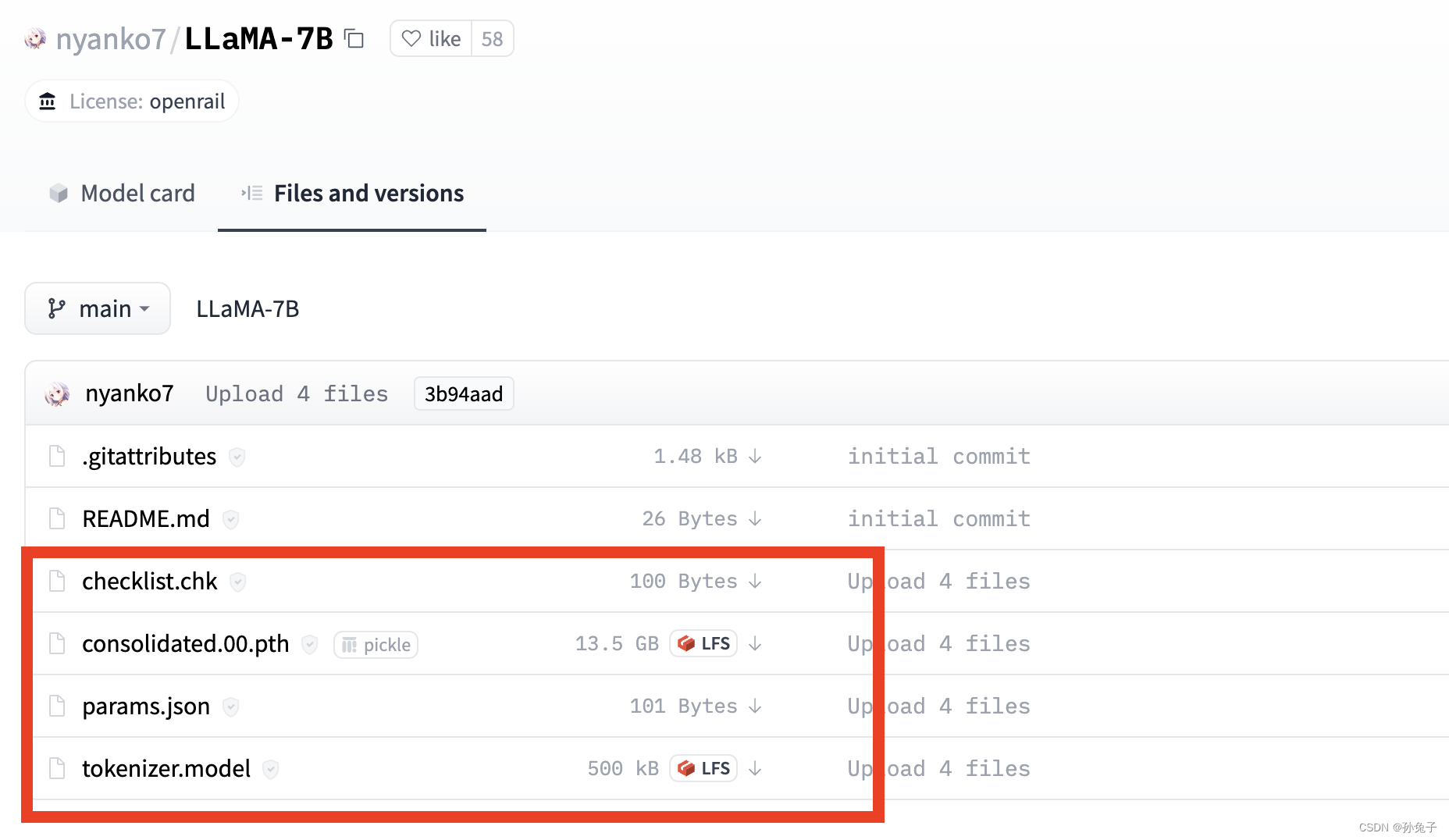

huggyllama/llama7b · Add chat_template so that it can be used for chat - Much like tokenization, different models expect very different input formats for chat. This project is heavily inspired. This is a repository that includes proper chat templates (or input formats) for large language models (LLMs), to support the chat_template feature of transformers. I'm trying to use a template to predictably receive chat output, basically just the AI to fill. Customize the chatbot's tone and expertise by editing the create_prompt_template function.

MPT7B A Free OpenSource Large Language Model (LLM) Be on the Right - This is the reason we added chat templates as a feature. We compared Mistral 7B to. They also focus the model's learning on relevant aspects of the data. Chat templates specify how to convert conversations, represented as lists of messages, into a single tokenizable string in the format that the model expects. Cache import: load_prompt_cache, make_prompt_cache, save_prompt_cache.
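To illustrate the conversion described above, here is a minimal pure-Python sketch that turns a list of messages into one string. It assumes a ChatML-style layout (`<|im_start|>` / `<|im_end|>` markers), which is only one of several formats in use; the helper name `render_chatml` is hypothetical and is not part of transformers, where the real templates are stored with the tokenizer.

```python
from typing import Dict, List


def render_chatml(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Flatten a conversation into a single ChatML-style string.

    Assumption: the target model was trained on ChatML-style markers.
    Other models expect different markers, which is why templates exist.
    """
    parts = []
    for message in messages:
        # Each turn is wrapped as: <|im_start|>{role}\n{content}<|im_end|>\n
        parts.append(f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n")
    if add_generation_prompt:
        # Open an assistant turn so the model continues from here.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


conversation = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Hello!"},
]
prompt = render_chatml(conversation)
print(prompt)
```

The resulting string is what would then be tokenized. Swapping in a different model usually means swapping the markers, not the list-of-messages representation.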

GitHub DecXx/Llama27bdemo This Is demonstrates model [Llama27b - To shed some light on this, I've created an interesting project. We compared Mistral 7B to. from mlx_lm import generate, load. A subreddit for discussing Llama, the large language model created by Meta AI. Customize the chatbot's tone and expertise by editing the create_prompt_template function.
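The source does not show the body of `create_prompt_template`, so the following is only a hypothetical sketch of what such a function might look like. The parameters `tone` and `expertise`, the wording of the system prompt, and the return type are all assumptions made for illustration.

```python
from typing import Dict, List


def create_prompt_template(
    user_input: str,
    tone: str = "friendly",
    expertise: str = "general knowledge",
) -> List[Dict[str, str]]:
    """Hypothetical helper: build a message list with a customizable system prompt."""
    # Editing this string is where tone and expertise would be customized.
    system_prompt = (
        f"You are a {tone} assistant with expertise in {expertise}. "
        "Answer clearly and concisely."
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_input},
    ]


messages = create_prompt_template(
    "How do I keep a sourdough starter alive?",
    tone="formal",
    expertise="baking",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Returning a list of role/content messages, rather than a finished string, leaves the model-specific formatting to whatever chat template the tokenizer provides.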

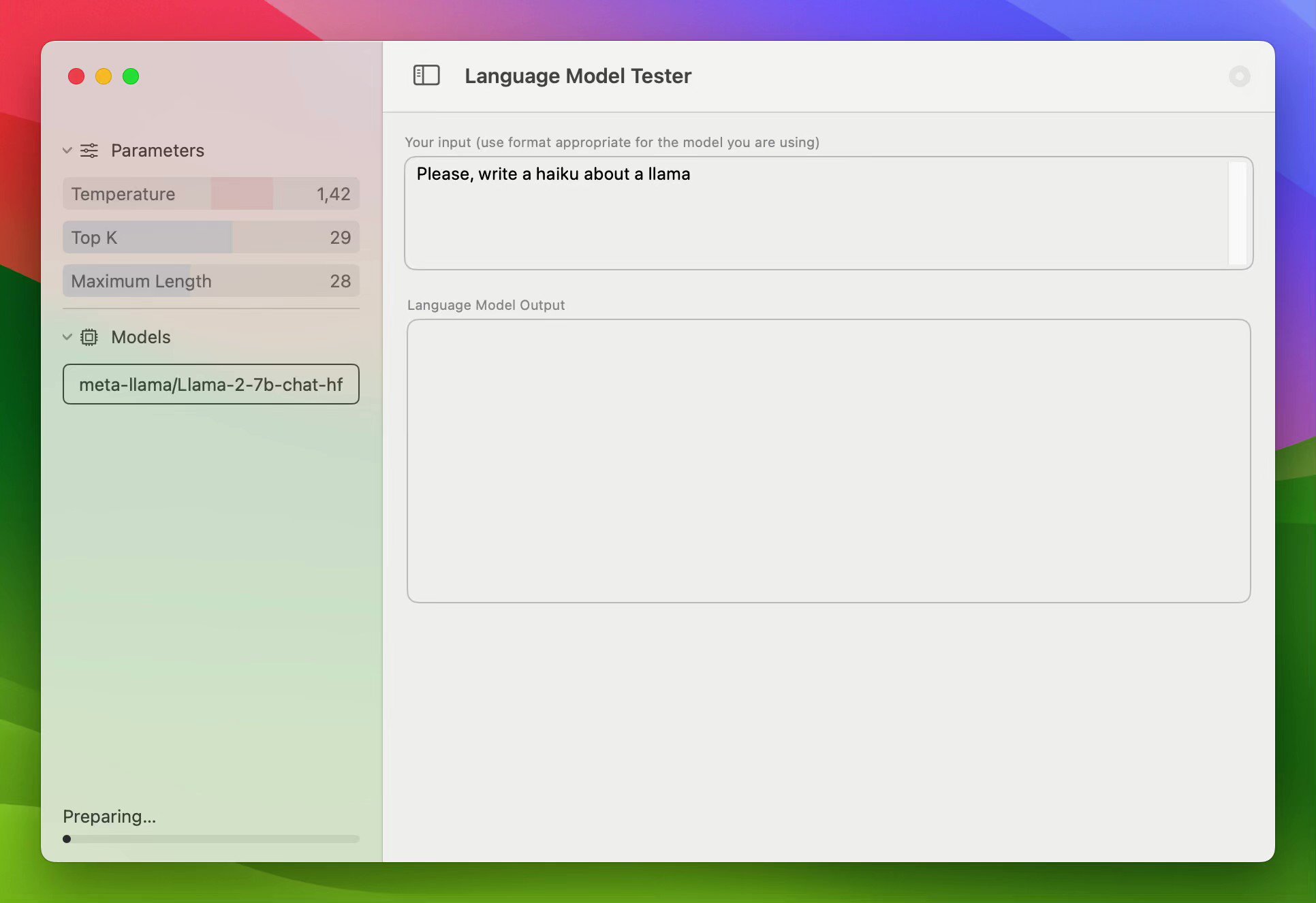

Unlock the Power of AI Conversations Chat with Any 7B Model from - I am quite new to fine-tuning and have been planning to fine-tune the Mistral 7B model on the SHP dataset. Essentially, we build the tokenizer and the model with the from_pretrained method, and we use the generate method to chat with the help of the chat template.
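The workflow described above can be sketched roughly as follows, assuming the transformers and torch packages are installed and that the chosen checkpoint ships a chat template. The function name `chat_once` and its parameters are hypothetical; the model identifier is supplied by the caller, and nothing is downloaded until the function is called.

```python
from typing import Dict, List


def chat_once(model_id: str, messages: List[Dict[str, str]], max_new_tokens: int = 128) -> str:
    """Load a chat model, format the messages with its chat template, and generate one reply."""
    # Imported lazily so that defining this function does not require the packages.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Render the conversation to a string using the template stored with the tokenizer.
    prompt = tokenizer.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )
    # The template already inserts special tokens, so do not add them a second time.
    inputs = tokenizer(prompt, return_tensors="pt", add_special_tokens=False)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens and decode only the newly generated part.
    new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


# Example call (downloads the checkpoint; replace the placeholder with a real chat model id):
# reply = chat_once("<chat-model-id>", [{"role": "user", "content": "Hello!"}])
# print(reply)
```

A 7B checkpoint needs substantial memory when loaded this way; options such as reduced precision or device placement are left out to keep the sketch short.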

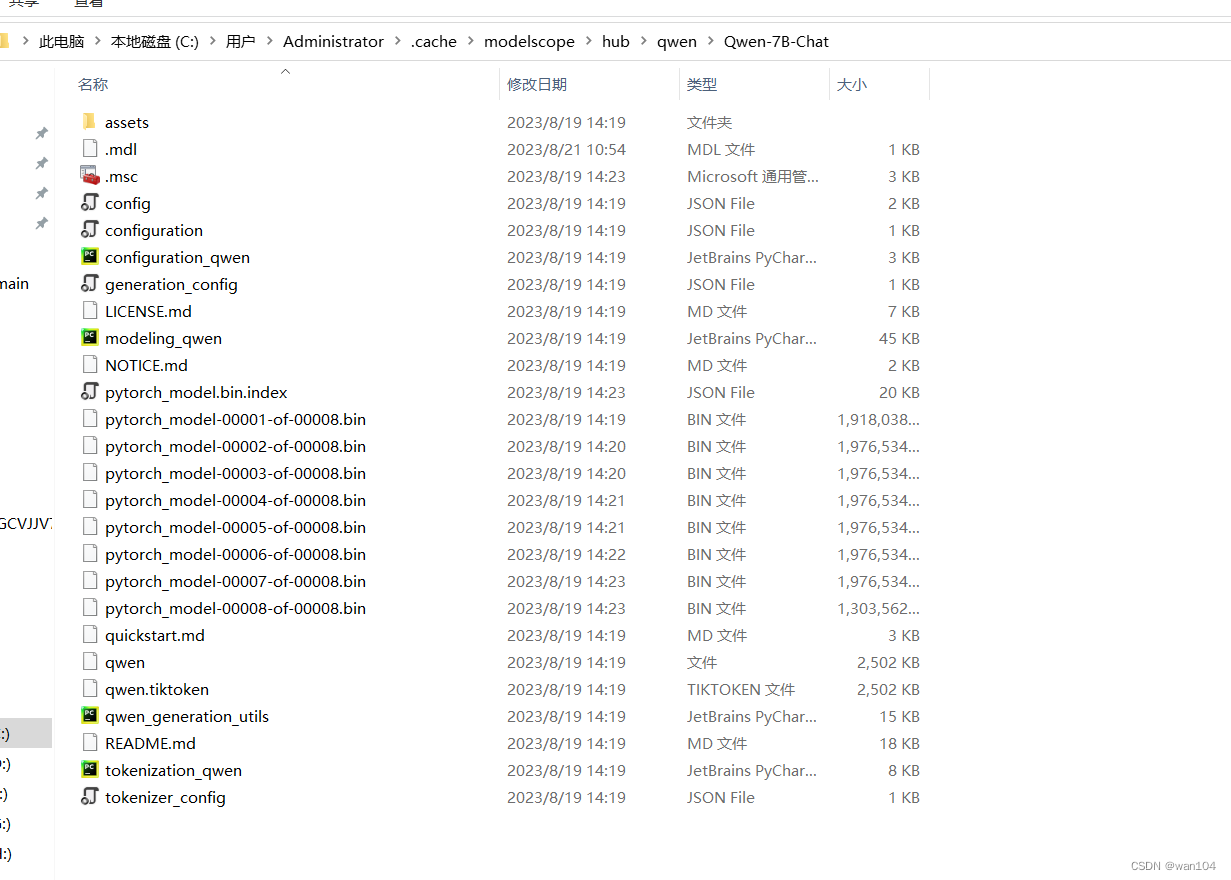

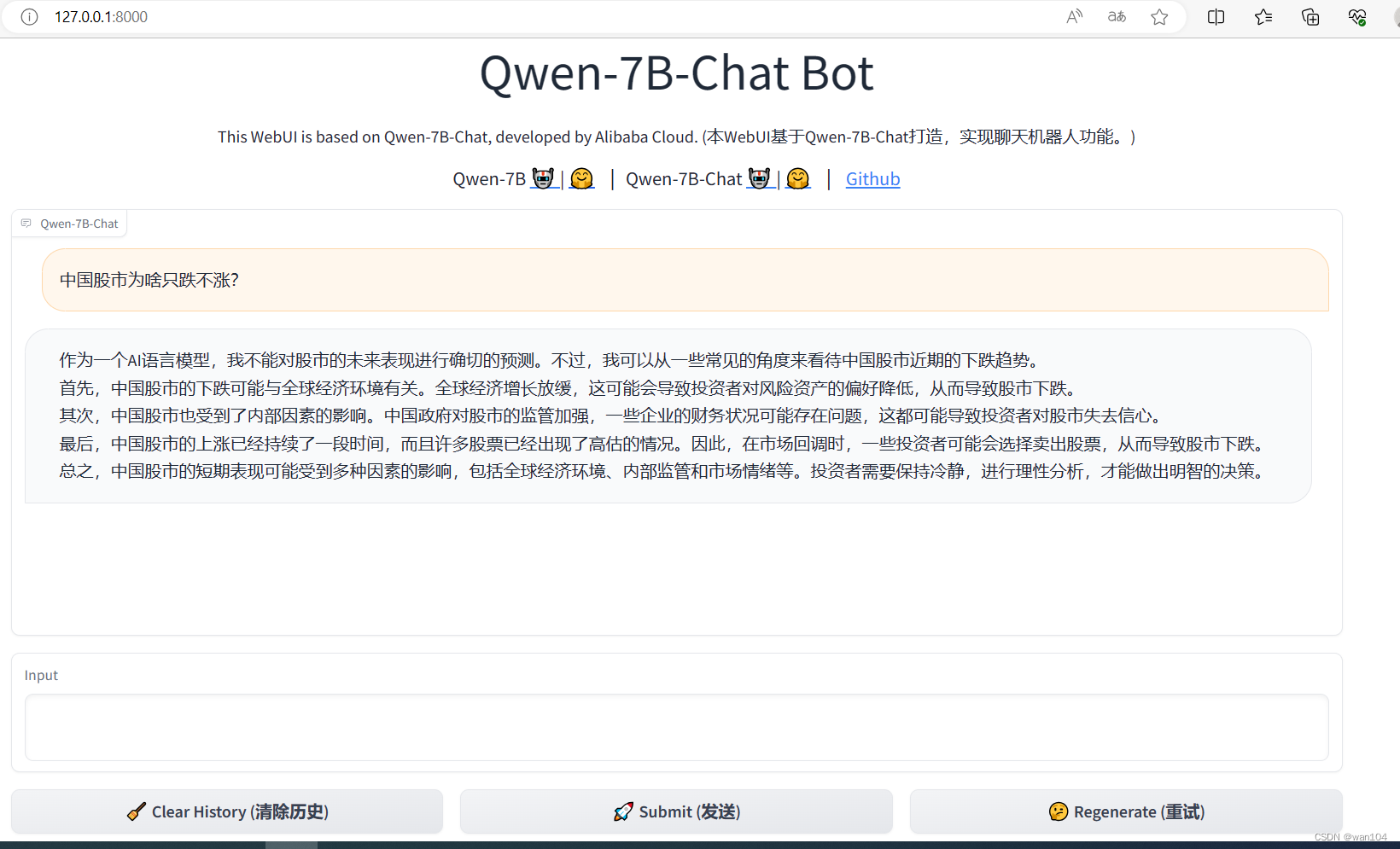

Successfully reproducing local deployment of the Tongyi Qianwen 7B and 7B-chat models_Tongyi Qianwen github (CSDN blog) - Chat templates are part of the tokenizer. Essentially, we build the tokenizer and the model with the from_pretrained method, and we use the generate method to chat with the help of the chat template provided by the tokenizer. I'm trying to use a template to predictably receive chat output, basically just the AI to fill. from mlx_lm import generate, load.

Successfully reproducing local deployment of the Tongyi Qianwen 7B and 7B-chat models_Tongyi Qianwen github (CSDN blog) - This is the reason we added chat templates as a feature. This is a repository that includes proper chat templates (or input formats) for large language models (LLMs), to support the chat_template feature of transformers. This project is heavily inspired. I'm trying to use a template to predictably receive chat output, basically just the AI to fill.

AI for Groups Build a MultiUser Chat Assistant Using 7BClass Models - Chat with your favourite models and data securely. They also focus the model's learning on relevant aspects of the data. I'm trying to use a template to predictably receive chat output, basically just the AI to fill. Chat templates specify how to convert conversations, represented as lists of messages, into a single tokenizable string in the format that the model expects.